From Ada to AI

December 2025

The team at Coretechs is “all in” on custom software development. Some on the team still remember punched cards and worked at the original Ada Information Clearinghouse, now remembered only in the Internet Archive. The middle tier of the team came of age as the Python and PHP programming languages became ascendant. And the youngest of our crew started out with the latest and greatest, such as Rust and Elixir. That blend of long-term memory and cutting-edge technology is why Coretechs is able to deliver the right mix for all of our clients.

In a perfect world, everyone might rewrite all their software every five years to take advantage of improvements in languages, coding style, and performance. However, a complete rewrite of an existing system can be extremely labor-intensive and expensive. That’s why we focus on working with whatever our clients bring to the table. Whether that’s a brand-new concept we’re free to develop from scratch with the latest tools, or a 20-year-old legacy web-based system written in a language for which strong support is harder to find, Coretechs is able to help. So, to honor that fount of knowledge and blend of skills, here’s a brief history of computer programming…

Computer programming began not with silicon chips, but with the visionary insights of Ada Byron Lovelace in the mid-19th century. Working alongside Charles Babbage on his proposed Analytical Engine, Lovelace authored what is widely considered the first algorithm written for a machine. More importantly, she made the conceptual leap that a machine could do more than crunch numbers; it could manipulate symbols to compose music, create art, or carry out complex logic. This “poetical science,” as she called it, laid the philosophical foundation for artificial intelligence, framing the computer as a general-purpose tool that could carry out sophisticated reasoning if given the right set of instructions, even as Lovelace cautioned that the Engine could originate nothing on its own.

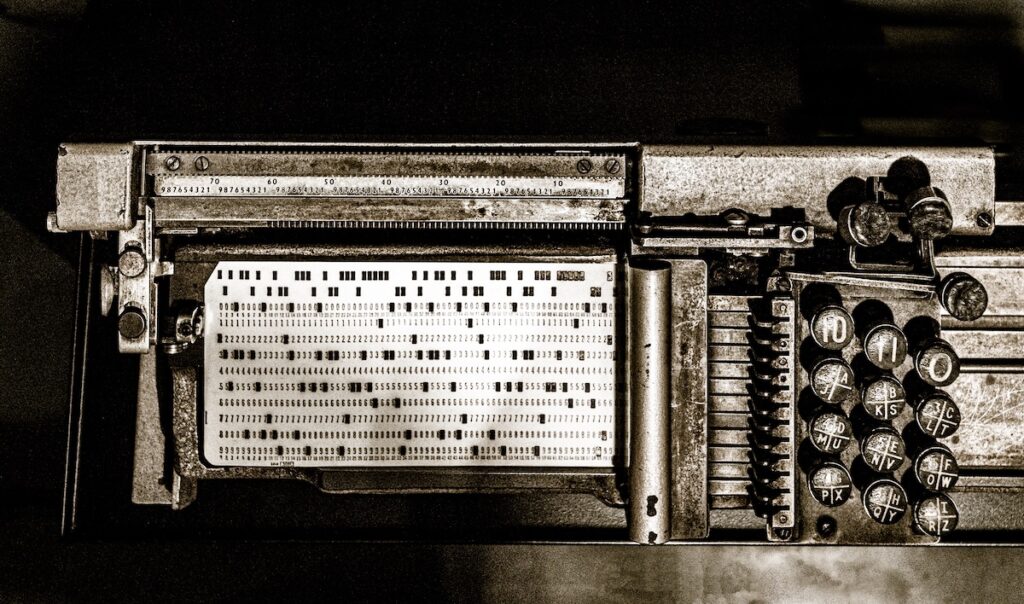

Fast forward to the mid-20th century. As the vacuum tubes of the 1940s gave way to the transistors of the 1950s, the need for more human-readable instructions gave birth to the first widely used high-level languages. Fortran (Formula Translation), released in 1957, revolutionized scientific computing by allowing engineers to write complex mathematical formulas in a form that resembled algebra rather than machine code. Shortly after, in 1959, COBOL (Common Business-Oriented Language) emerged to handle the burgeoning data needs of the business world. While Fortran conquered the laboratory, COBOL became the backbone of global banking and administrative systems, proving that programming could be tailored to specific professional domains. Believe it or not, COBOL still runs major systems within the US Government, such as those at the IRS and the Social Security Administration, which shows just how hard it can be to move to a new programming language once a major system has been written in an older one.

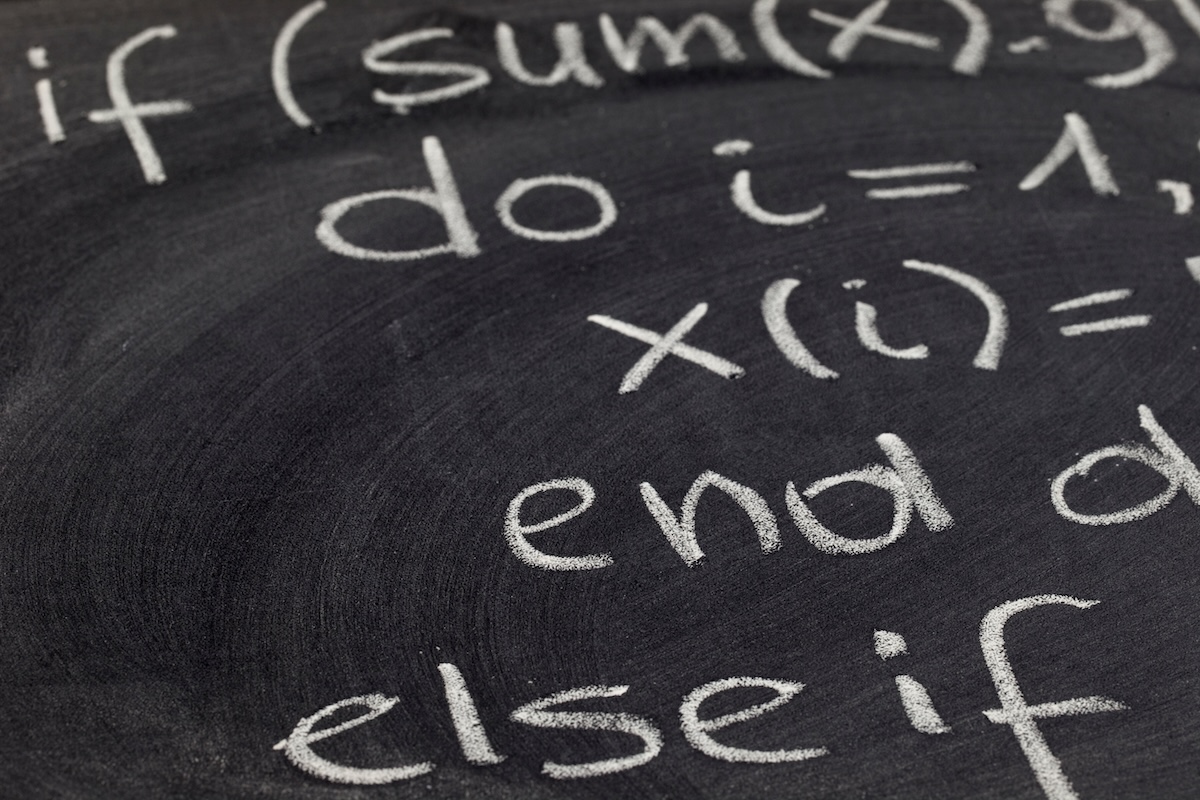

The late 1950s through the 1970s introduced a more theoretical and structured approach to coding. John McCarthy’s Lisp (List Processing), debuting in 1958, became the defining “AI language.” Its ability to treat code as data and its support for recursion made it an ideal vehicle for early symbolic AI research and natural language processing. Meanwhile, Niklaus Wirth’s Pascal arrived in 1970 as a tool for teaching structured programming. By emphasizing clear syntax and strong data typing, Pascal influenced a generation of developers to prioritize reliability and readability, traits that would eventually prove essential for the massive software architectures of the modern era.

As the 1990s ushered in the World Wide Web, the focus of programming shifted toward accessibility and scale. PHP emerged in 1995 as a grassroots solution for dynamic web development, eventually powering a large share of the web’s interactive sites. A few years later, at the turn of the millennium, Microsoft introduced the .NET Framework (and its flagship language, C#) to provide a robust, “managed” environment for enterprise-level applications. These tools democratized software creation, allowing millions of developers to build and connect systems globally and generating the massive datasets that would later serve as the “fuel” for modern machine learning.

Today, the crown of the AI era belongs to Python. Although it was created in the early 1990s with a focus on simplicity and readability, it has evolved into the lingua franca of data science. Python’s vast ecosystem of libraries, such as TensorFlow and PyTorch, allows developers to build sophisticated neural networks with just a few lines of code. By abstracting away complexity that once required the mathematical rigor of Fortran or the symbolic depth of Lisp, Python has helped fulfill Lovelace’s vision of a machine that can “follow analysis” to achieve seemingly intelligent ends.

Reflecting on the path from Ada’s first algorithm to the generative models of today, it is clear that each language was a necessary stepping stone. From the efficiency of Fortran to the enterprise stability of .NET and the web-scale reach of PHP, every era refined our ability to communicate with machines. We have moved from telling computers exactly how to do a task to showing them what we want them to learn. As we move deeper into the age of AI, these foundational languages remain the silent architecture beneath every “intelligent” response, proving that the art of programming is, and always has been, the art of expanding human thought.

If we can help with your development needs, from COBOL to Python or from Lisp to AI-based development and integration, please give us a call at 202 540 0002, drop us a message on our Contact Page, or check out our Pricing Guide page!